-

- **This reader favorite recipe is included in [The Wholesome Yum Easy Keto Cookbook](https://www.wholesomeyum.com/cookbook/)!** Order your copy to get 100 easy keto recipes in a beautiful print hardcover book, including **80 exclusive recipes not found anywhere else** (not even this blog!), my complete fathead dough guide, the primer for starting keto, and much more.

-

- [ORDER THE EASY KETO COOKBOOK HERE](https://www.wholesomeyum.com/cookbook/)

-- RECIPE CARD

-

-

-

-

-  -

-- Low Carb Paleo Keto Almond Flour Biscuits Recipe - 4 Ingredients

-

- This paleo almond flour biscuits recipe needs just 4 common ingredients & 10 minutes prep. These buttery low carb keto biscuits will become your favorite!

-

- Prep Time 10 minutes

-

- Cook Time 15 minutes

-

- Total Time 25 minutes

- - Recipe Video

-

- *Click or tap on the image below to play the video. It's the easiest way to learn how to make this recipe!*

- - Ingredients

-

- *Click underlined ingredients to see where to get them.*

- Please ensure Safari reader mode is OFF to view ingredients.

-

- [](https://www.wholesomeyumfoods.com/product-category/flours/)

-- Get The Best Flour For This Recipe

-

- Meet Wholesome Yum Blanched Almond Flour and Coconut Flour, with the highest quality + super fine texture, for the best tasting baked goods.

-

- [GET FLOURS](https://www.wholesomeyumfoods.com/product-category/flours/)

- - Instructions

-

- **Get RECIPE TIPS in the post above, nutrition info + recipe notes below!**

-

- *Click on the times in the instructions below to start a kitchen timer while you cook.*

-

- 1. Preheat the oven to 350 degrees F (177 degrees C). Line a baking sheet with parchment paper.

-

- 2. Mix dry almond flour, baking powder, and sea salt together in a large bowl. Stir in whisked egg, melted butter, and sour cream, if using (optional).

-

- 3. Scoop tablespoonfuls of the dough onto the lined baking sheet (a cookie scoop is the fastest way). Form into rounded biscuit shapes (flatten slightly with your fingers).

-

- 4. Bake for about [15 minutes](#), until firm and golden. Cool on the baking sheet.

- - Readers Also Made These Similar Recipes

-- [](https://www.wholesomeyum.com/recipes/low-carb-almond-flour-biscotti-paleo-sugar-free/)

-- [](https://www.wholesomeyum.com/recipes/low-carb-brownies-with-almond-butter-paleo-gluten-free/)

-- [ ](https://www.wholesomeyum.com/recipes/easy-crab-cakes/)

-- [](https://www.wholesomeyum.com/recipes/low-carb-bread-recipe-almond-flour-bread-paleo-gluten-free/)

- - Recipe Notes

-

- **Serving size:** 1 biscuit

-

- Nutrition info does not include optional sour cream. Carb count is about the same either way.

- - Video Showing How To Make Paleo Almond Flour Biscuits:

-

- *Don't miss the VIDEO above - it's the easiest way to learn how to make Paleo Almond Flour Biscuits!*

- - Nutrition Information Per Serving

-

- Nutrition Facts

-

- Amount per serving. Serving size in recipe notes above.

-

- Calories 164

-

- Fat 15g

-

- Protein 5g

-

- Total Carbs 4g

-

- Net Carbs 2g

-

- Fiber 2g

-

- Sugar 1g

-

- **Where does nutrition info come from?** Nutrition facts are provided as a courtesy, sourced from the USDA Food Database. You can find individual ingredient carb counts we use in the [Low Carb & Keto Food List](https://www.wholesomeyum.com/low-carb-keto-food-list/). Net carb count excludes fiber, erythritol, and allulose, because these do not affect blood sugar in most people. (Learn about [net carbs here](https://www.wholesomeyum.com/how-to-calculate-net-carbs/).) We try to be accurate, but feel free to make your own calculations.

-

- © Copyright Maya Krampf for Wholesome Yum. **Please DO NOT SCREENSHOT OR COPY/PASTE recipes** to social media or websites. We'd LOVE for you to share a link with photo instead. 🙂

-

-

\ No newline at end of file
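The net carb figure in the nutrition facts above follows from a simple subtraction. Here is a minimal Python sketch, assuming the rule stated in the nutrition note (net carbs exclude fiber, erythritol, and allulose). The function name and parameters are illustrative and do not come from the recipe page.

```python
def net_carbs(total_carbs_g, fiber_g, erythritol_g=0.0, allulose_g=0.0):
    """Net carbs = total carbs minus fiber, erythritol, and allulose (grams)."""
    excluded = fiber_g + erythritol_g + allulose_g
    # Clamp at zero so rounded label values can never give a negative count.
    return max(total_carbs_g - excluded, 0.0)


# Values from the nutrition facts for one biscuit: 4 g total carbs, 2 g fiber.
biscuit_net = net_carbs(total_carbs_g=4, fiber_g=2)
print(biscuit_net)  # 2
```

Label values are rounded to whole grams, so a result computed this way may differ slightly from one based on unrounded ingredient data.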

diff --git a/pages/Recipes/Mojo pork.sync-conflict-20250817-085619-UULL5XD.md b/pages/Recipes/Mojo pork.sync-conflict-20250817-085619-UULL5XD.md

deleted file mode 100644

index c87f1a9..0000000

--- a/pages/Recipes/Mojo pork.sync-conflict-20250817-085619-UULL5XD.md

+++ /dev/null

@@ -1,6 +0,0 @@

----

-title: Mojo pork

-date: 2024-01-24 21:26:47

-tags:

----

-https://www.recipetineats.com/juicy-cuban-mojo-pork-roast-chef-movie-recipe/

diff --git a/pages/Recipes/Pan - Recommended Cooking Temperatures.sync-conflict-20250817-085622-UULL5XD.md b/pages/Recipes/Pan - Recommended Cooking Temperatures.sync-conflict-20250817-085622-UULL5XD.md

deleted file mode 100644

index 2f239b0..0000000

--- a/pages/Recipes/Pan - Recommended Cooking Temperatures.sync-conflict-20250817-085622-UULL5XD.md

+++ /dev/null

@@ -1,85 +0,0 @@

----

-title: Pan - Recommended Cooking Temperatures

-updated: 2020-09-04 16:47:28Z

-created: 2020-09-04 16:34:54Z

----

-

-Pan - Recommended Cooking Temperatures

-

-[HestanCue_RecommendedCookingTemperatures.pdf](../../_resources/HestanCue_RecommendedCookingTemperatures.pdf)

-

-[Hestan\_Cue\_-\_Temperature\_Chart\_Fahrenheit\_-\_Printable.pdf](../../_resources/Hestan_Cue_-_Temperature_Chart_Fahrenheit_-_Printa.pdf)

-

-- 450

-

- HIGH

-- PROTEIN - QUICK SEAR, CHAR

-

- Sous Vide Meats, Crispy Skin Fish

- Great for crisping skin or developing crust. Typically you should sear for 1-2 minutes per side

- and then finish cooking at 350°F.

-- VEGETABLES - CHAR, BLISTER

-

- Broccoli, Green Beans

- To develop color while maintaining crunch, or develop crusts on vegetables that release water, like mushrooms.

-- 425

-- PROTEIN - SEAR, STIR-FRY

-

- Shrimp, Cubed Meats

- The ideal temperature to develop color and cook small proteins like scallops, shrimp or stir-fry.

-- VEGETABLES - STIR-FRY, PAN FRY

-

- Peppers, Carrots, Home Fries

- For cooking small (or small cut) vegetables and developing color.

-- 400

-- PROTEIN - BROWN, PAN FRY

-

- Pork Chops, Chicken

- An all-around temperature for cooking

- meat while developing color.

-- VEGETABLES - ROAST

-

- Asparagus, Corn (off the cob), Zucchini

- Great for quickly caramelizing produce high in natural sugars or cooking large (or large cut) vegetables.

-- 375

-- PROTEIN - BROWN, SHALLOW FRY

-

- Chicken Cutlet, Pan Fried Fish, Ground Meats

- The perfect temperature for gently frying

- breaded meats or browning butter.

-- VEGETABLES - BROWN, SHALLOW FRY

-

- Brussels Sprouts, Pancakes, Hash Browns, Crispy Eggplant

- Great for cooking tough vegetables, batters, or shallow frying breaded items and fritters.

-- 350

-- PROTEIN - PAN ROAST AFTER SEAR

-

- Steak, Lamb

- The sweet spot to finish your meat after a quick

- sear at a higher temperature.

-- VEGETABLES - SAUTE, TOAST

-

- Onions, Garlic, Peppers, Quesadilla

- The quintessential saute temperature. Also good for toasted sandwiches, like a crispy and gooey grilled cheese.

-- 325

-- PROTEIN - SCRAMBLED EGGS

-

- Scrambled, Country Omelettes

- Best with butter or non-stick cooking spray (not oil). We recommend no more than 4 eggs at a time.

-- 300

-- PROTEIN - RENDER

-

- Duck Breast, Pancetta

- To slowly draw out fat for use in other recipes or to

- make your meat extra crispy.

-- VEGETABLES - CARAMELIZE, SWEAT

-

- Onions, Garlic, Peppers

- The best temperature to gently cook and gradually develop color.

-

-- 250

-- PROTEIN - FRIED EGGS

-

- Sunny Side Up, Over Easy, Egg White Omelettes

- For that elusive tender egg white, low temperature is key.

- Best with butter or non-stick cooking spray (not oil).

\ No newline at end of file

diff --git a/pages/Recipes/Pan Temperatures.sync-conflict-20250817-085619-UULL5XD.md b/pages/Recipes/Pan Temperatures.sync-conflict-20250817-085619-UULL5XD.md

deleted file mode 100644

index 939605d..0000000

--- a/pages/Recipes/Pan Temperatures.sync-conflict-20250817-085619-UULL5XD.md

+++ /dev/null

@@ -1,11 +0,0 @@

----

-title: Pan Temperatures

-updated: 2022-01-11 20:54:17Z

-created: 2020-05-10 14:56:46Z

----

-

-Low heat is 200°F to 300°F - for slow cooking and smoking.

-

-Medium heat is 300°F to 400°F - for cooking chicken, vegetables, omelettes and pancakes, steaks, or oil frying.

-

-High heat is 400°F to 600°F - for searing meat.
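The three heat bands in the note above can be expressed as a small lookup. This is a minimal Python sketch, not part of the original note. The note's ranges share their endpoints (300°F and 400°F), so the sketch assumes a boundary value belongs to the higher band. The function name `heat_level` is hypothetical.

```python
def heat_level(temp_f):
    """Map a pan temperature in °F to the note's low/medium/high bands."""
    if 200 <= temp_f < 300:
        return "low"      # slow cooking and smoking
    if 300 <= temp_f < 400:
        return "medium"   # chicken, vegetables, omelettes, pancakes, steaks
    if 400 <= temp_f <= 600:
        return "high"     # searing meat
    return None           # outside the 200-600 °F range the note covers


for temp in (250, 350, 450):
    print(temp, heat_level(temp))
```

Treating the shared endpoints as part of the higher band is only one reasonable reading. The note itself does not say which band they fall in.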

diff --git a/pages/Recipes/Smoke point of cooking oils - Wikipedia.sync-conflict-20250817-085617-UULL5XD.md b/pages/Recipes/Smoke point of cooking oils - Wikipedia.sync-conflict-20250817-085617-UULL5XD.md

deleted file mode 100644

index 7104074..0000000

--- a/pages/Recipes/Smoke point of cooking oils - Wikipedia.sync-conflict-20250817-085617-UULL5XD.md

+++ /dev/null

@@ -1,79 +0,0 @@

----

-title: Smoke point of cooking oils - Wikipedia

-updated: 2022-01-11 20:55:16Z

-created: 2022-01-11 20:54:42Z

-source: https://en.wikipedia.org/wiki/Template:Smoke_point_of_cooking_oils

----

-

-From Wikipedia, the free encyclopedia

-

-

-| Fat | Quality | Smoke point (°C)[\[caution 1\]](#cite_note-1) | Smoke point (°F) |

-| --- | --- | --- | --- |

-| [Almond oil](https://en.wikipedia.org/wiki/Almond \"Almond\") | | 221 °C | 430 °F[\[1\]](#cite_note-2) |

-| [Avocado oil](https://en.wikipedia.org/wiki/Avocado_oil \"Avocado oil\") | Refined | 270 °C | 520 °F[\[2\]](#cite_note-scott-3)[\[3\]](#cite_note-jonbarron.org-4) |

-| [Beef tallow](https://en.wikipedia.org/wiki/Tallow \"Tallow\") | | 250 °C | 480 °F |

-| [Butter](https://en.wikipedia.org/wiki/Butter \"Butter\") | | 150 °C | 302 °F[\[4\]](#cite_note-chef9-5) |

-| [Butter](https://en.wikipedia.org/wiki/Clarified_butter \"Clarified butter\") | Clarified | 250 °C | 482 °F[\[5\]](#cite_note-6) |

-| [Canola oil](https://en.wikipedia.org/wiki/Canola \"Canola\") | | 220–230 °C[\[6\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011121-7) | 428–446 °F |

-| [Canola oil](https://en.wikipedia.org/wiki/Canola \"Canola\") ([Rapeseed](https://en.wikipedia.org/wiki/Rapeseed \"Rapeseed\")) | Expeller press | 190–232 °C | 375–450 °F[\[7\]](#cite_note-8) |

-| [Canola oil](https://en.wikipedia.org/wiki/Canola \"Canola\") ([Rapeseed](https://en.wikipedia.org/wiki/Rapeseed \"Rapeseed\")) | Refined | 204 °C | 400 °F |

-| [Canola oil](https://en.wikipedia.org/wiki/Canola \"Canola\") ([Rapeseed](https://en.wikipedia.org/wiki/Rapeseed \"Rapeseed\")) | Unrefined | 107 °C | 225 °F |

-| [Castor oil](https://en.wikipedia.org/wiki/Castor_oil \"Castor oil\") | Refined | 200 °C[\[8\]](#cite_note-detwiler-9) | 392 °F |

-| [Coconut oil](https://en.wikipedia.org/wiki/Coconut_oil \"Coconut oil\") | Refined, dry | 204 °C | 400 °F[\[9\]](#cite_note-nutiva1-10) |

-| [Coconut oil](https://en.wikipedia.org/wiki/Coconut_oil \"Coconut oil\") | Unrefined, dry expeller pressed, virgin | 177 °C | 350 °F[\[9\]](#cite_note-nutiva1-10) |

-| [Corn oil](https://en.wikipedia.org/wiki/Corn_oil \"Corn oil\") | | 230–238 °C[\[10\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011284-11) | 446–460 °F |

-| [Corn oil](https://en.wikipedia.org/wiki/Corn_oil \"Corn oil\") | Unrefined | 178 °C[\[8\]](#cite_note-detwiler-9) | 352 °F |

-| [Cottonseed oil](https://en.wikipedia.org/wiki/Cottonseed_oil \"Cottonseed oil\") | Refined, bleached, deodorized | 220–230 °C[\[11\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011214-12) | 428–446 °F |

-| [Flaxseed oil](https://en.wikipedia.org/wiki/Linseed_oil \"Linseed oil\") | Unrefined | 107 °C | 225 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Grape seed oil](https://en.wikipedia.org/wiki/Grape_seed_oil \"Grape seed oil\") | | 216 °C | 421 °F |

-| [Lard](https://en.wikipedia.org/wiki/Lard \"Lard\") | | 190 °C | 374 °F[\[4\]](#cite_note-chef9-5) |

-| [Mustard oil](https://en.wikipedia.org/wiki/Mustard_oil \"Mustard oil\") | | 250 °C | 480 °F[\[12\]](#cite_note-13) |

-| [Olive oil](https://en.wikipedia.org/wiki/Olive_oil \"Olive oil\") | Refined | 199–243 °C | 390–470 °F[\[13\]](#cite_note-North_American_Olive_Oil_Association-14) |

-| [Olive oil](https://en.wikipedia.org/wiki/Olive_oil \"Olive oil\") | Virgin | 210 °C | 410 °F |

-| [Olive oil](https://en.wikipedia.org/wiki/Olive_oil \"Olive oil\") | Extra virgin, low acidity, high quality | 207 °C | 405 °F[\[3\]](#cite_note-jonbarron.org-4)[\[14\]](#cite_note-Gray2015-15) |

-| [Olive oil](https://en.wikipedia.org/wiki/Olive_oil \"Olive oil\") | Extra virgin | 190 °C | 374 °F[\[14\]](#cite_note-Gray2015-15) |

-| [Olive oil](https://en.wikipedia.org/wiki/Olive_oil \"Olive oil\") | Extra virgin | 160 °C | 320 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Palm oil](https://en.wikipedia.org/wiki/Palm_oil \"Palm oil\") | Fractionated | 235 °C[\[15\]](#cite_note-16) | 455 °F |

-| [Peanut oil](https://en.wikipedia.org/wiki/Peanut_oil \"Peanut oil\") | Refined | 232 °C[\[3\]](#cite_note-jonbarron.org-4) | 450 °F |

-| [Peanut oil](https://en.wikipedia.org/wiki/Peanut_oil \"Peanut oil\") | | 227–229 °C[\[3\]](#cite_note-jonbarron.org-4)[\[16\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011234-17) | 441–445 °F |

-| [Peanut oil](https://en.wikipedia.org/wiki/Peanut_oil \"Peanut oil\") | Unrefined | 160 °C[\[3\]](#cite_note-jonbarron.org-4) | 320 °F |

-| [Pecan oil](https://en.wikipedia.org/wiki/Pecan_oil \"Pecan oil\") | | 243 °C[\[17\]](#cite_note-18) | 470 °F |

-| [Rice bran oil](https://en.wikipedia.org/wiki/Rice_bran_oil \"Rice bran oil\") | Refined | 232 °C[\[18\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011303-19) | 450 °F |

-| [Safflower oil](https://en.wikipedia.org/wiki/Safflower_oil \"Safflower oil\") | Unrefined | 107 °C | 225 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Safflower oil](https://en.wikipedia.org/wiki/Safflower_oil \"Safflower oil\") | Semirefined | 160 °C | 320 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Safflower oil](https://en.wikipedia.org/wiki/Safflower_oil \"Safflower oil\") | Refined | 266 °C | 510 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Sesame oil](https://en.wikipedia.org/wiki/Sesame_oil \"Sesame oil\") | Unrefined | 177 °C | 350 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Sesame oil](https://en.wikipedia.org/wiki/Sesame_oil \"Sesame oil\") | Semirefined | 232 °C | 450 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Soybean oil](https://en.wikipedia.org/wiki/Soybean_oil \"Soybean oil\") | | 234 °C[\[19\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology201192-20) | 453 °F |

-| [Sunflower oil](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | Neutralized, dewaxed, bleached & deodorized | 252–254 °C[\[20\]](#cite_note-FOOTNOTEVegetable_Oils_in_Food_Technology2011153-21) | 486–489 °F |

-| [Sunflower oil](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | Semirefined | 232 °C[\[3\]](#cite_note-jonbarron.org-4) | 450 °F |

-| [Sunflower oil](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | | 227 °C[\[3\]](#cite_note-jonbarron.org-4) | 441 °F |

-| [Sunflower oil](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | Unrefined, first cold-pressed, raw | 107 °C[\[21\]](#cite_note-22) | 225 °F |

-| [Sunflower oil, high oleic](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | Refined | 232 °C | 450 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| [Sunflower oil, high oleic](https://en.wikipedia.org/wiki/Sunflower_oil \"Sunflower oil\") | Unrefined | 160 °C | 320 °F[\[3\]](#cite_note-jonbarron.org-4) |

-| Vegetable oil blend | Refined | 220 °C[\[14\]](#cite_note-Gray2015-15) | 428 °F |

-

-1. **[^](#cite_ref-1 \"Jump up\")** Specified smoke, fire, and flash points of any fat and oil can be misleading: they depend almost entirely upon the free fatty acid content, which increases during storage or use. The smoke point of fats and oils decreases when they are at least partially split into free fatty acids and glycerol; the glycerol portion decomposes to form acrolein, which is the major source of the smoke evolved from heated fats and oils. A partially hydrolyzed oil therefore smokes at a lower temperature than non-hydrolyzed oil. (Adapted from Gunstone, Frank, ed. Vegetable oils in food technology: composition, properties and uses. John Wiley & Sons, 2011.)

-

-1. **[^](#cite_ref-2 \"Jump up\")** Jacqueline B. Marcus (2013). [*Culinary Nutrition: The Science and Practice of Healthy Cooking*](https://books.google.com/books?id=p2j3v6ImcX0C&pg=PT73#v=onepage&q&f=false). Academic Press. p. 61. [ISBN](https://en.wikipedia.org/wiki/ISBN_%28identifier%29 \"ISBN (identifier)\") [978-012-391882-6](https://en.wikipedia.org/wiki/Special:BookSources/978-012-391882-6 \"Special:BookSources/978-012-391882-6\"). Table 2-3 Smoke Points of Common Fats and Oils.

-2. **[^](#cite_ref-scott_3-0 \"Jump up\")** [\"Smoking Points of Fats and Oils\"](http://whatscookingamerica.net/Information/CookingOilTypes.htm). *What’s Cooking America*.

-3. **^** [\"Smoke Point of Oils\"](https://www.jonbarron.org/diet-and-nutrition/healthiest-cooking-oil-chart-smoke-points). *Baseline of Health*. Jonbarron.org. 2012-04-17. Retrieved 2019-12-26.

-4. **^** [The Culinary Institute of America](https://en.wikipedia.org/wiki/The_Culinary_Institute_of_America \"The Culinary Institute of America\") (2011). The Professional Chef (9th ed.). Hoboken, New Jersey: John Wiley & Sons. [ISBN](https://en.wikipedia.org/wiki/ISBN_%28identifier%29 \"ISBN (identifier)\") [978-0-470-42135-2](https://en.wikipedia.org/wiki/Special:BookSources/978-0-470-42135-2 \"Special:BookSources/978-0-470-42135-2\"). OCLC 707248142.

-5. **[^](#cite_ref-6 \"Jump up\")** [\"Smoke Point of different Cooking Oils\"](http://chartsbin.com/view/1962). *Charts Bin*. 2011.

-6. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011121_7-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 121.

-7. **[^](#cite_ref-8 \"Jump up\")** [\"What is the \"truth\" about canola oil?\"](https://web-beta.archive.org/web/20110724001051/http://www.spectrumorganics.com/shared/faq.php?fqid=34). Spectrum Organics, Canola Oil Manufacturer. Archived from [the original](http://www.spectrumorganics.com/shared/faq.php?fqid=34) on July 24, 2011.

-8. **^** Detwiler, S. B.; Markley, K. S. (1940). \"Smoke, flash, and fire points of soybean and other vegetable oils\". *Oil & Soap*. **17** (2): 39–40. [doi](https://en.wikipedia.org/wiki/Doi_%28identifier%29 \"Doi (identifier)\"):[10.1007/BF02543003](https://doi.org/10.1007%2FBF02543003).

-9. **^** [\"Introducing Nutiva Organic Refined Coconut Oil\"](https://web.archive.org/web/20150214100025/http://nutiva.com/introducing-nutiva-refined-coconut-oil/). *Nutiva*. Archived from [the original](http://nutiva.com/introducing-nutiva-refined-coconut-oil/) on 2015-02-14.

-10. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011284_11-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 284.

-11. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011214_12-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 214.

-12. **[^](#cite_ref-13 \"Jump up\")** [\"Mustard Seed Oil\"](http://www.clovegarden.com/ingred/oi_mustz.html). *Clovegarden*.

-13. **[^](#cite_ref-North_American_Olive_Oil_Association_14-0 \"Jump up\")** [\"Olive Oil Smoke Point\"](http://blog.aboutoliveoil.org/olive-oil-smoke-point). Retrieved 2016-08-25.

-14. **^** Gray, S (June 2015). [\"Cooking with extra virgin olive oil\"](http://acnem.org/members/journals/ACNEM_Journal_June_2015.pdf) (PDF). *ACNEM Journal*. **34** (2): 8–12.

-15. **[^](#cite_ref-16 \"Jump up\")** (in Italian) [Scheda tecnica dell'olio di palma bifrazionato PO 64](http://www.oleificiosperoni.it/schede_tecniche/SCHEDA%20TECNICA%20PALMA%20PO64.pdf).

-16. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011234_17-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 234.

-17. **[^](#cite_ref-18 \"Jump up\")** Ranalli N, Andres SC, Califano AN (Jul 2017). [\"Dulce de leche‐like product enriched with emulsified pecan oil: Assessment of physicochemical characteristics, quality attributes, and shelf‐life\"](https://onlinelibrary.wiley.com/doi/abs/10.1002/ejlt.201600377). *European Journal of Lipid Science and Technology*. [doi](https://en.wikipedia.org/wiki/Doi_%28identifier%29 \"Doi (identifier)\"):[10.1002/ejlt.201600377](https://doi.org/10.1002%2Fejlt.201600377). Retrieved 15 January 2021.

-18. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011303_19-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 303.

-19. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology201192_20-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 92.

-20. **[^](#cite_ref-FOOTNOTEVegetable_Oils_in_Food_Technology2011153_21-0 \"Jump up\")** [Vegetable Oils in Food Technology (2011)](#CITEREFVegetable_Oils_in_Food_Technology2011), p. 153.

-21. **[^](#cite_ref-22 \"Jump up\")** [\"Organic unrefined sunflower oil\"](https://www.maisonorphee.com/en/unrefined-sunflower-oil/). Retrieved 18 December 2016.
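One way to use the table above is to shortlist fats whose listed smoke point sits above a target pan temperature. This is a minimal Python sketch, not part of the original note. The dictionary copies five °F values from the table, and for extra virgin olive oil it uses the 374 °F row, one of several values listed. The names `SMOKE_POINT_F` and `fats_above` are hypothetical.

```python
# Smoke points in °F, copied from a few rows of the table above.
SMOKE_POINT_F = {
    "butter": 302,
    "clarified butter": 482,
    "extra virgin olive oil": 374,  # the table also lists 405 °F and 320 °F
    "refined peanut oil": 450,
    "refined avocado oil": 520,
}


def fats_above(pan_temp_f, margin_f=0):
    """Return fats whose listed smoke point exceeds pan_temp_f + margin_f."""
    threshold = pan_temp_f + margin_f
    return sorted(name for name, point in SMOKE_POINT_F.items() if point > threshold)


print(fats_above(400))
```

As the table's caution note says, listed smoke points can be misleading because they drop as free fatty acid content rises with storage or use, so a nonzero `margin_f` is a sensible precaution.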

diff --git a/pages/Revelation Space.sync-conflict-20250817-085627-UULL5XD.md b/pages/Revelation Space.sync-conflict-20250817-085627-UULL5XD.md

deleted file mode 100644

index 5bad394..0000000

--- a/pages/Revelation Space.sync-conflict-20250817-085627-UULL5XD.md

+++ /dev/null

@@ -1,12 +0,0 @@

----

-tags: [[books]]

----

-

-- #Quotes

- - id:: 65bcfaf1-6ce0-4d92-9e39-fce3a88a4061

- > You 'know where you are' with a good totalitarian regime

- - id:: 65bcfaf1-9aa5-4051-aa91-92db7574b544

- > Now that he was dead the role had a certain potentiality, like a vacant throne.

- - id:: 65bd4c04-6e07-4a56-b12d-0e58d0e2581b

- > ‘You can hardly blame them.’

- > ‘Assuming stupidity is an inherited trait, then no, I can’t.’

\ No newline at end of file

diff --git a/pages/Running.sync-conflict-20250817-085616-UULL5XD.md b/pages/Running.sync-conflict-20250817-085616-UULL5XD.md

deleted file mode 100644

index 6d82ab8..0000000

--- a/pages/Running.sync-conflict-20250817-085616-UULL5XD.md

+++ /dev/null

@@ -1,14 +0,0 @@

-- Norwegian Method [[Running]]

- title:: Running

- - https://marathonhandbook.com/norwegian-method/

- - **The Norwegian method of endurance training, also called the Norwegian model of endurance training, is an approach to facilitating physiological adaptations to your cardiovascular system, metabolism, and muscles to support improved aerobic endurance performance.**

- **Training is separated into three zones:**

- - Zone 1 is the low-intensity zone

- - Zone 2 is the sub-threshold zone

- - and Zone 3 is above your [lactate threshold](https://marathonhandbook.com/lactate-threshold-training/).

- - Zone 2 is the target zone for the key workouts, which involves performing intervals at or slightly below your anaerobic threshold or lactate threshold.

- - **Zone 1** primarily targets the aerobic energy system, also known as the oxidative energy system, because energy is created in the presence of oxygen.

- - **Zones 2 and 3** both target the anaerobic energy systems.

- A hallmark characteristic of the Norwegian model of endurance training is using a ^^high-volume, low-intensity approach^^ while prioritizing lactate-guided threshold interval training.

--

--

\ No newline at end of file

diff --git a/pages/Scripts/Automatic Backups for WSL2 – Stephen's Thoughts.sync-conflict-20250817-085630-UULL5XD.md b/pages/Scripts/Automatic Backups for WSL2 – Stephen's Thoughts.sync-conflict-20250817-085630-UULL5XD.md

deleted file mode 100644

index 21eadd0..0000000

--- a/pages/Scripts/Automatic Backups for WSL2 – Stephen's Thoughts.sync-conflict-20250817-085630-UULL5XD.md

+++ /dev/null

@@ -1,96 +0,0 @@

----

-page-title: \"Automatic Backups for WSL2 – Stephen's Thoughts\"

-url: https://stephenreescarter.net/automatic-backups-for-wsl2/

-date: \"2023-03-13 15:12:29\"

----

-[Windows Subsystem for Linux](https://docs.microsoft.com/en-us/windows/wsl) (WSL) is pure awesome, and [WSL2](https://docs.microsoft.com/en-us/windows/wsl/wsl2-index) is even more awesome. (If you’ve never heard of it before, it’s Linux running inside Windows 10 – not as a virtual machine or emulator, but as a fully supported environment that shares the machine.) I’ve been testing WSL2 for a few months now as my local development environment. The performance benefits alone make it worth it. However, there is one problem with WSL2: **there isn’t a trivial way to do automatic backups.**

-

-In this tutorial, I will explain the difference between WSL1 and WSL2, and how you can set up automatic backups of WSL2. This is the setup I use to back up all of my WSL2 instances, and it integrates nicely with an offsite backup tool like Backblaze, to ensure I never lose an important file.

-

-- The Difference Between WSL1 and WSL2

-

- Under WSL1, the Linux filesystem is stored as plain files within the Windows 10 filesystem. As an example, this is the path for my Pengwin WSL1 filesystem:

-

- Inside that directory you’ll find the usual Linux directories, such as `etc`, `home`, `root`, etc. This makes backing up WSL1 trivial. Your existing backup program can read the files in this directory and back them up when they change. It’s super simple and *just works*.

-

- *Important: **Do not modify the files in this directory, ever.** This can corrupt your WSL1 instance and lose your files. If you need to restore files from your backup, restore into a separate directory and manually restore back into WSL1 via other methods.*

-

- However, under WSL2 the Linux filesystem is wrapped up in a virtual hard disk (`VHDX`) file:

-

- Using a virtual hard disk in this way greatly enhances the file IO performance of WSL2, but it does mean you cannot access the files directly. Instead you have a single file, and in the case of my Pengwin install, it’s over 15GB! ([If you’re not careful, it’ll grow huge](https://stephenreescarter.net/how-to-shrink-a-wsl2-virtual-disk/)!)

-

- As such, unlike the trivial backups we get for WSL1, we cannot use the same trick for WSL2. Many backup tools explicitly ignore virtual disk files, and those that do try to back it up will have trouble tracking changes. It may also be in a locked/changing state when a backup snapshot tries to read it… ultimately, it’s just not going to end well.

-- My WSL2 Backup Solution

-

- My first idea for backing up WSL2 was to `rsync` my home directory onto Windows. It turns out this approach works really well!

-

- I use a command like this:

-

- The above command is wrapped inside `~/backup.sh`, which makes it easy to call on demand – without needing to get the parameters and paths right each time. Additionally, I added some database backup logic, since I want my development databases backed up too. You’ll find my full `~/backup.sh` script (with other features) at the end of this post.

-

- This method works incredibly well for getting the files into Windows where my backup program can see them and back them up properly. **However, it is also a manual process.**

-

- Some users have suggested using cron within WSL2 to trigger periodic backups; however, cron only runs while WSL2 is running. As a result, you’ll only get backups if WSL2 is up at the time your backup is scheduled to run, so cron isn’t the most reliable solution. As an aside, I have also seen reports that cron doesn’t always run in WSL. Note that I haven’t tested this myself, so I don’t know the details (i.e. use at your own risk).

-- Automating WSL2 Backups

-

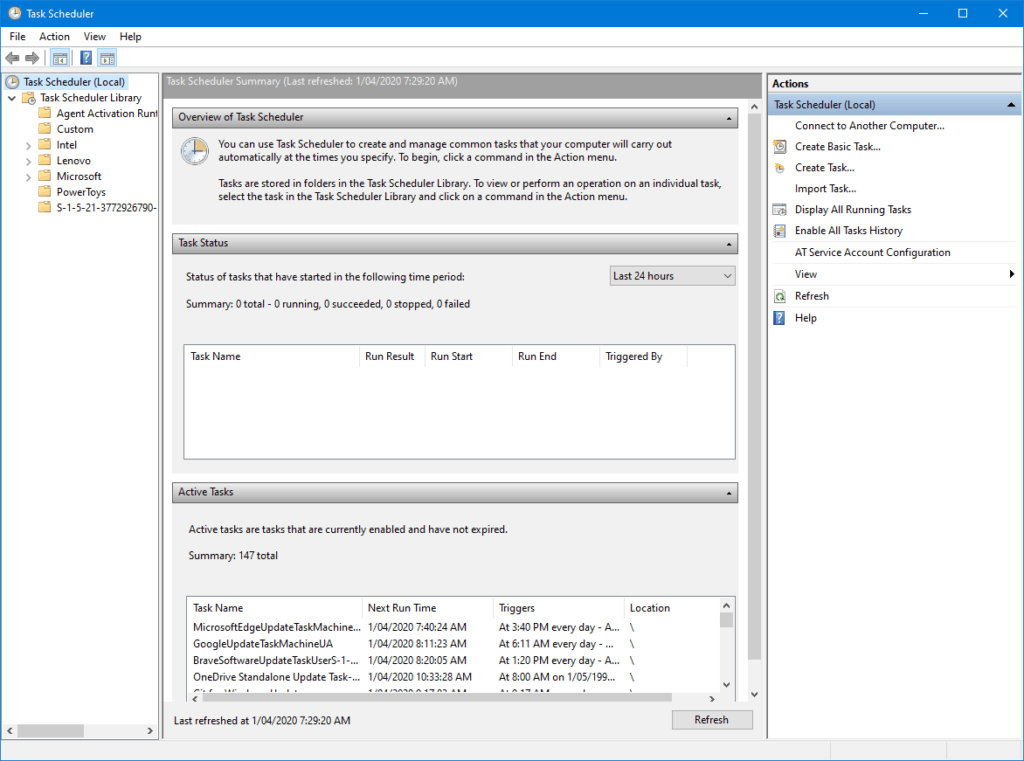

- After some creative searching, I discovered the **Windows Task Scheduler**. It’s the equivalent to *cron/crontab* on Linux, and allows you to schedule tasks under Windows. I had no idea such a thing existed, although, in hindsight, it seems pretty logical that it would. Using the *Task Scheduler*, we can set up automatic backups for WSL2.

-

- You can find it by searching for *Task Scheduler* in the start menu, or by looking in the *Windows Administrative Tools* folder.

-

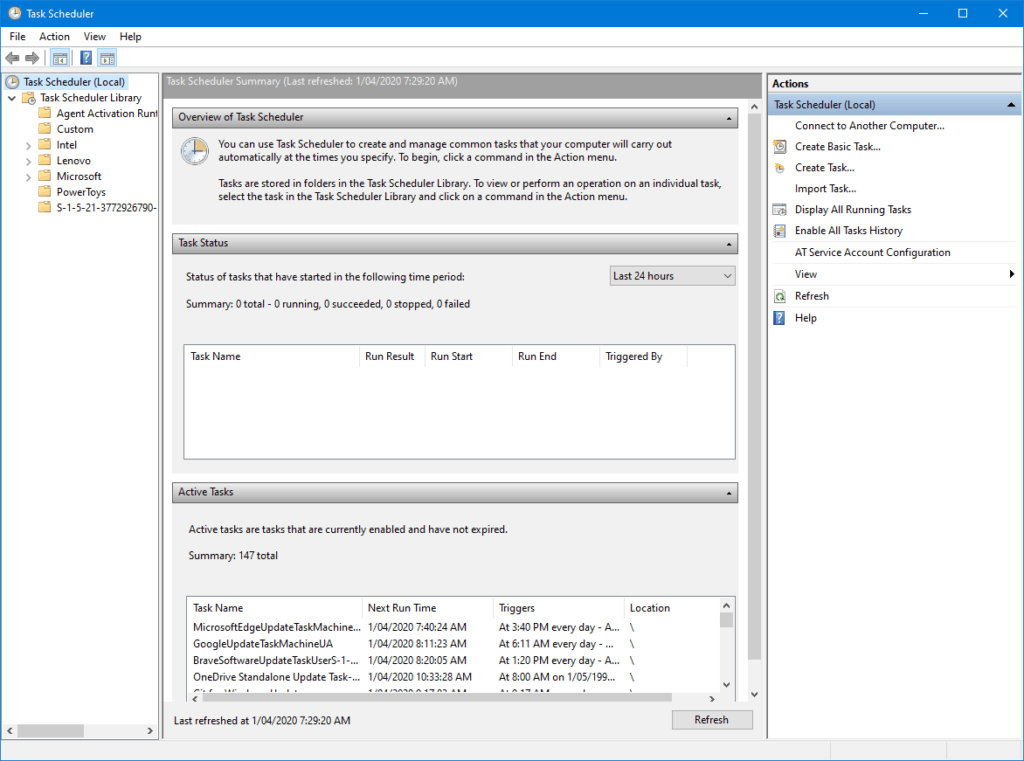

- Once it opens, you’ll see something that looks like this:

-

-

-

- Windows Task Scheduler

-

- With the Task Scheduler, we can tie our manual `rsync`-based backup to a schedule.

-

- To set up our automated backup, I’d recommend first going into the *Custom* folder in the left folder pane. It’ll keep your tasks organised and separate from the system tasks. From there you can select *Create Task…* in the actions list on the right.

-

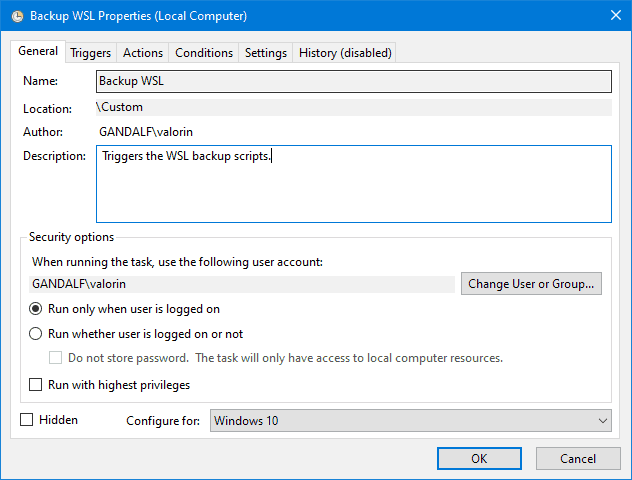

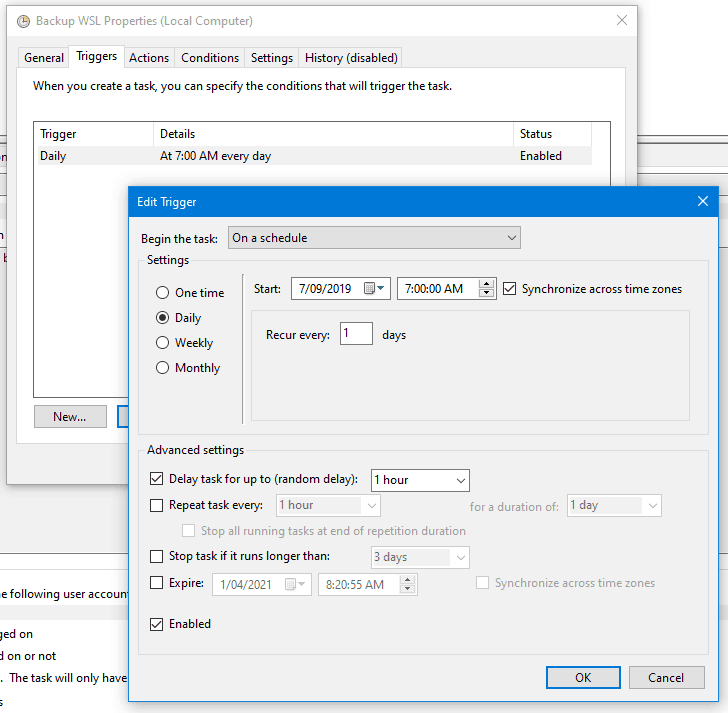

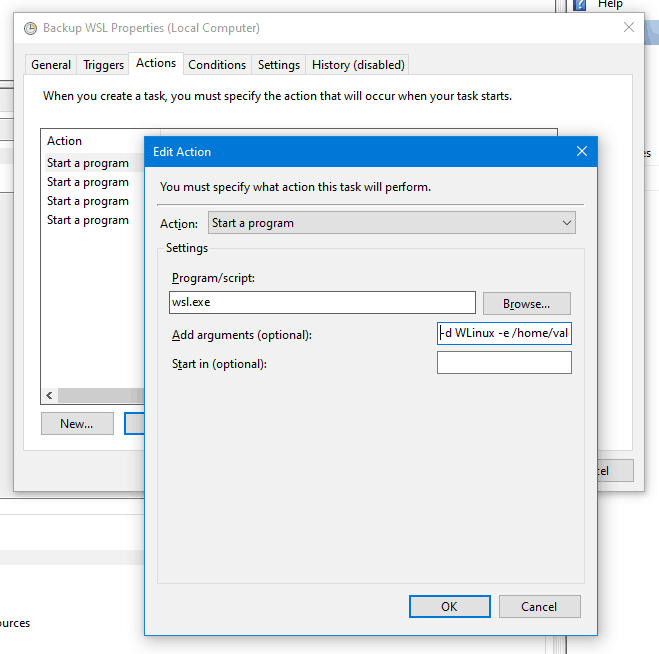

- The following screenshots show the configuration I use for my backups; customise it to suit your needs. I’ll point out the settings that are important to get it working.

-

-

-

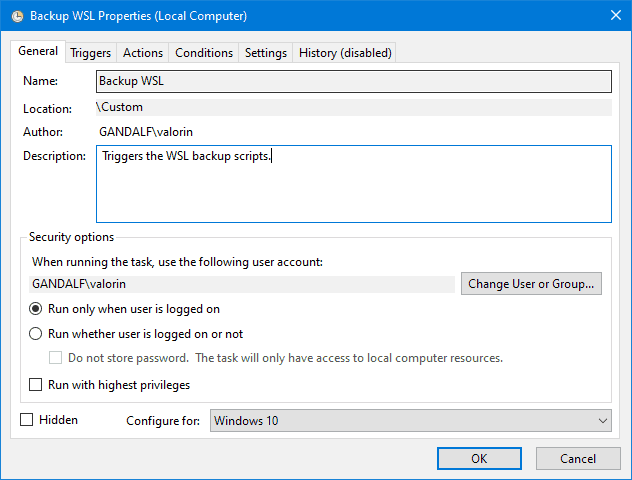

- Set *Configure For* to: `Windows 10`

-

-

-

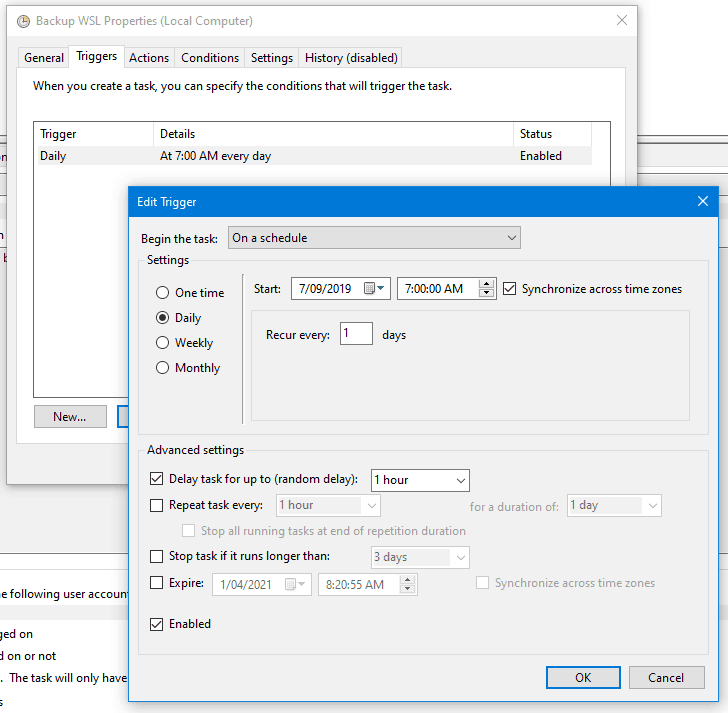

- Click `New` to create a new trigger, which will launch the backup. I have mine configured to run *daily* on a *schedule*, starting at a *random time* between *7am and 8am*. Don’t forget to check that *Enabled* is ticked.

-

-

-

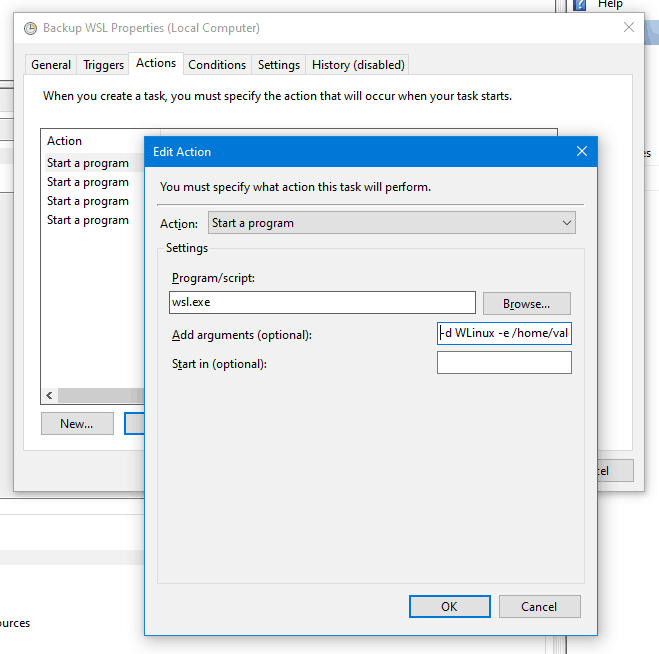

- Click `New` to create a new action, which is how the backup script is executed.

-

- Set *Program/script* to `wsl.exe`

- Set *Add arguments* to `-d WLinux -e /home/valorin/backup.sh`

-

- This executes WSL with the distribution `WLinux` (Pengwin), executing the script `/home/valorin/backup.sh`.

-

-

-

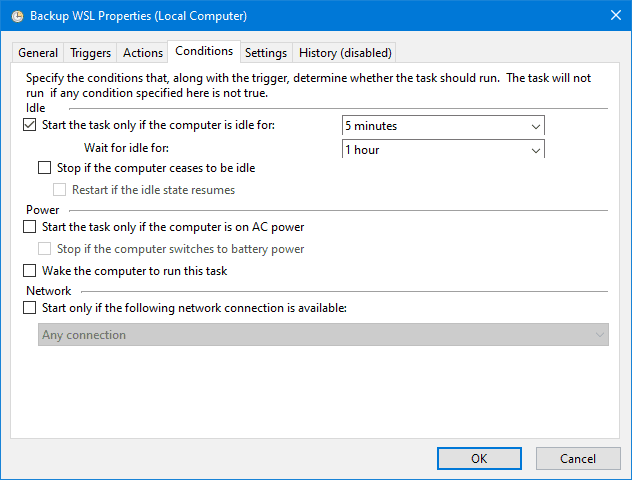

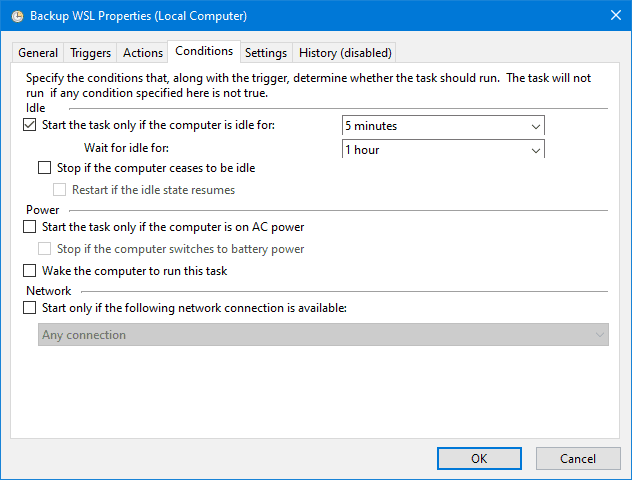

- In this tab you can control the conditions under which the backup script runs. Mine waits for the computer to be idle, since it is a laptop and the backup can sometimes slow everything down when large files are being backed up.

-

-

-

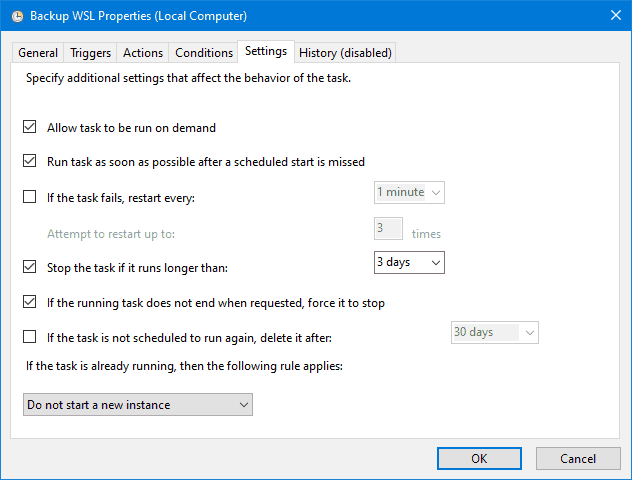

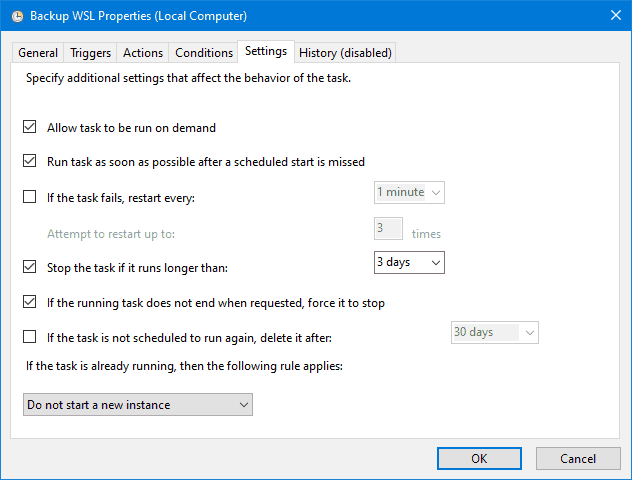

- You can configure the settings however suits you best.

-

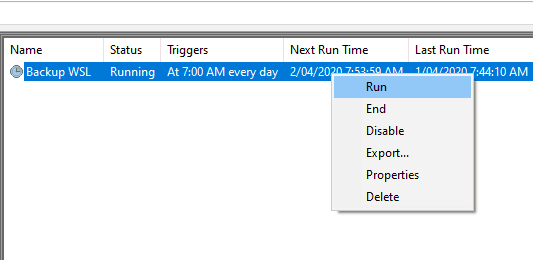

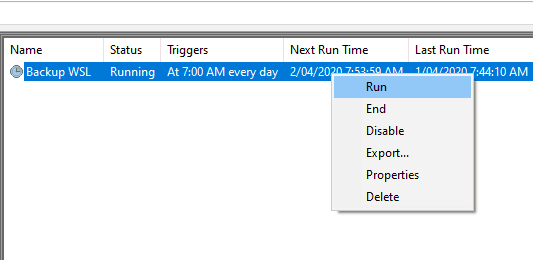

- That’s it, you now have automatic backups of WSL2. With the task fully configured, you should be able to wait for the schedule to run at the configured time. You can also right click on the task in the list and select `Run` to manually trigger the backup to check it works.

-

-

-- Backing Up MySQL/MariaDB

-

- If you have any databases (such as MySQL/MariaDB), you’ll probably want to keep a backup of that data as well. While you could get `rsync` to include the raw database files, that can easily result in corrupted data. So the alternative is to use a tool like `mysqldump` to dump the database data into a file. Once it’s in a file, you can easily include this in the `rsync` backup.

-

- For my daily backups, I use `mysqldump` to dump all of my current databases into their own files within my home directory. These files are then backed up by `rsync` into Windows alongside everything else. I’ve wrapped all of this up inside `~/backup.sh`, which I keep synchronised between my WSL2 instances.

-- My `~/backup.sh` Script

-

- This is the current version of my `~/backup.sh` script. It includes `mysqldump` for my development databases and `rsync` for my files. Since I use it across all my WSL instances, it relies on the `WSL_DISTRO_NAME` environment variable to work in each of them automatically.

-

- *Note, you’ll need to allow `sudo mysql` to work without a password to automate the script.*

-- Summary

-

- I’ve been using this method of automatic backups for WSL2 since I migrated over from WSL1 last year. It works really well with my workflow, and I usually don’t notice the backup window popping up every morning. It’s simple and minimal fuss, and doesn’t require any system changes to WSL2.

-

- If you do any work or keep any important files within your WSL2, you’ll want to ensure it’s backed up. Coupled with Backblaze, I have WSL2 backed up locally and online, keeping my dev work safe.

-

- I hope this has been helpful – please reach out if you have any questions about my approach. If you have a different approach to backing up WSL2, please share – I’d love to see how you solve it.

\ No newline at end of file

diff --git a/pages/Scripts/Configuring Consul DNS Forwarding in Ubuntu 16.04.sync-conflict-20250817-085617-UULL5XD.md b/pages/Scripts/Configuring Consul DNS Forwarding in Ubuntu 16.04.sync-conflict-20250817-085617-UULL5XD.md

deleted file mode 100644

index bc07195..0000000

--- a/pages/Scripts/Configuring Consul DNS Forwarding in Ubuntu 16.04.sync-conflict-20250817-085617-UULL5XD.md

+++ /dev/null

@@ -1,129 +0,0 @@

----

-title: Configuring Consul DNS Forwarding in Ubuntu 16.04

-updated: 2021-09-01 13:45:43Z

-created: 2021-09-01 13:45:43Z

-source: https://andydote.co.uk/2019/05/29/consul-dns-forwarding/

----

-

-- DEPRECATED - This doesn’t work properly

-

- [Please see this post for an updated version which works!](https://andydote.co.uk/2019/09/24/consul-ubuntu-dns-revisited/)

-

- * * *

-

- One of the advantages of using [Consul](https://www.consul.io/) for service discovery is that besides an HTTP API, you can also query it by DNS.

-

- The DNS server listens on port `8600` by default, and you can query either A records or SRV records from it. [SRV](https://en.wikipedia.org/wiki/SRV_record) records are useful as they contain additional properties (`priority`, `weight` and `port`), and you can get multiple records back from a single query, letting you do load balancing client side:

-

- ```bash

- $ dig @localhost -p 8600 consul.service.consul SRV +short

-

- 1 10 8300 vagrant1.node.dc1.consul.

- 1 14 8300 vagrant2.node.dc1.consul.

- 2 100 8300 vagrant3.node.dc1.consul.

- ```

-

- A records are also useful, as they mean we should be able to treat services registered to Consul like any other domain - but it doesn’t work:

-

- ```bash

- $ curl http://consul.service.consul:8500

- curl: (6) Could not resolve host: consul.service.consul

- ```

-

- The reason for this is that the system’s built-in DNS resolver doesn’t know how to query Consul. We can, however, configure it to forward any `*.consul` requests to Consul.

-- [](#solution---forward-dns-queries-to-consul)Solution - Forward DNS queries to Consul

-

- As I usually target Ubuntu-based machines, this means configuring `systemd-resolved` to forward to Consul. However, we want to keep Consul listening on its default port (`8600`), and `systemd-resolved` can only forward requests to port `53`, so we also need to configure `iptables` to redirect the requests.

-

- The steps are as follows:

-

- 1. Configure `systemd-resolved` to forward `.consul` TLD queries to the local consul agent

- 2. Configure `iptables` to redirect `53` to `8600`

-

- So let’s get to it!

- - [](#1-make-iptables-persistent)1\. Make iptables persistent

-

- IPTables configuration changes don’t persist through reboots, so the easiest way to solve this is with the `iptables-persistent` package.

-

- Typically I am scripting machines (using \[Packer\] or \[Vagrant\]), so I configure the install to be non-interactive:

-

- ```bash

- echo iptables-persistent iptables-persistent/autosave_v4 boolean false | sudo debconf-set-selections

- echo iptables-persistent iptables-persistent/autosave_v6 boolean false | sudo debconf-set-selections

-

- sudo DEBIAN_FRONTEND=noninteractive apt install -yq iptables-persistent

- ```

- - [](#2-update-systemd-resolved)2\. Update Systemd-Resolved

-

- The file to change is `/etc/systemd/resolved.conf`. By default it looks like this:

-

- ```bash

- [Resolve]

- #DNS=

- #FallbackDNS=8.8.8.8 8.8.4.4 2001:4860:4860::8888 2001:4860:4860::8844

- #Domains=

- #LLMNR=yes

- #DNSSEC=no

- ```

-

- We need to change the `DNS` and `Domains` lines - either editing the file by hand, or scripting a replacement with `sed`:

-

- ```bash

- sudo sed -i 's/#DNS=/DNS=127.0.0.1/g; s/#Domains=/Domains=~consul/g' /etc/systemd/resolved.conf

- ```

-

- The result of which is the file now reading like this:

-

- ```bash

- [Resolve]

- DNS=127.0.0.1

- #FallbackDNS=8.8.8.8 8.8.4.4 2001:4860:4860::8888 2001:4860:4860::8844

- Domains=~consul

- #LLMNR=yes

- #DNSSEC=no

- ```

-

- By specifying the `Domains` as `~consul`, we are telling `systemd-resolved` to forward requests for the `consul` TLD to the server specified in the `DNS` line.

- - [](#3-configure-resolvconf-too)3\. Configure Resolvconf too

-

- For compatibility with some applications (e.g. `curl` and `ping`), we also need to update `/etc/resolv.conf` to specify our local nameserver. Do **not** do this by editing the file directly!

-

- Instead, we need to add `nameserver 127.0.0.1` to `/etc/resolvconf/resolv.conf.d/head`. Again, I will script this, and as we need `sudo` to write to the file, the easiest way is to use `tee` to append the line and then run `resolvconf -u` to apply the change:

-

- ```bash

- echo "nameserver 127.0.0.1" | sudo tee --append /etc/resolvconf/resolv.conf.d/head

- sudo resolvconf -u

- ```

- - [](#configure-iptables)Configure iptables

-

- Finally, we need to configure iptables so that when `systemd-resolved` sends a DNS query to localhost on port `53`, it gets redirected to port `8600`. We’ll do this for both TCP and UDP requests, and then use `netfilter-persistent` to make the rules persistent:

-

- ```bash

- sudo iptables -t nat -A OUTPUT -d localhost -p udp -m udp --dport 53 -j REDIRECT --to-ports 8600

- sudo iptables -t nat -A OUTPUT -d localhost -p tcp -m tcp --dport 53 -j REDIRECT --to-ports 8600

-

- sudo netfilter-persistent save

- ```

-- [](#verification)Verification

-

- First, we can test that both Consul and Systemd-Resolved return an address for a consul service:

-

- ```bash

- $ dig @localhost -p 8600 consul.service.consul +short

- 10.0.2.15

-

- $ dig @localhost consul.service.consul +short

- 10.0.2.15

- ```

-

- And now we can try using `curl` to verify that we can resolve consul domains and normal domains still:

-

- ```bash

- $ curl -s -o /dev/null -w "%{http_code}\n" http://consul.service.consul:8500/ui/

- 200

-

- $ curl -s -o /dev/null -w "%{http_code}\n" http://google.com

- 301

- ```

-- [](#end)End

-

- There are also guides available on how to do this on [Hashicorp’s website](https://learn.hashicorp.com/consul/security-networking/forwarding), covering other DNS resolvers too (such as BIND, Dnsmasq, Unbound).

\ No newline at end of file

diff --git a/pages/Scripts/DotFiles.sync-conflict-20250817-085623-UULL5XD.md b/pages/Scripts/DotFiles.sync-conflict-20250817-085623-UULL5XD.md

deleted file mode 100644

index 1a0e167..0000000

--- a/pages/Scripts/DotFiles.sync-conflict-20250817-085623-UULL5XD.md

+++ /dev/null

@@ -1,37 +0,0 @@

----

-title: DotFiles

-updated: 2021-08-04 14:37:25Z

-created: 2020-08-19 11:26:42Z

----

-

-[https://www.atlassian.com/git/tutorials/dotfiles](https://www.atlassian.com/git/tutorials/dotfiles)

-install git and git-lfs

-```bash

-apt install git git-lfs

-```

-

-setup the directory and alias

-```bash

-git init --bare $HOME/.cfg

-alias config='/usr/bin/git --git-dir=$HOME/.cfg/ --work-tree=$HOME'

-config config --local status.showUntrackedFiles no

-echo "alias config='/usr/bin/git --git-dir=$HOME/.cfg/ --work-tree=$HOME'" >> $HOME/.bashrc

-```

-

-example of loading stuff into the repo

-```bash

-config status

-config add .vimrc

-config commit -m "Add vimrc"

-config add .bashrc

-config commit -m "Add bashrc"

-config push

-```

-

-init a new machine with the repo

-```bash

-alias config='/usr/bin/git --git-dir=$HOME/.cfg/ --work-tree=$HOME'

-echo ".cfg" >> .gitignore

-git clone --bare git@github.com:sstent/dotfiles.git $HOME/.cfg

-config checkout

-```

diff --git a/pages/Scripts/Fix WSL to Hyperv network.sync-conflict-20250817-085609-UULL5XD.md b/pages/Scripts/Fix WSL to Hyperv network.sync-conflict-20250817-085609-UULL5XD.md

deleted file mode 100644

index e252e60..0000000

--- a/pages/Scripts/Fix WSL to Hyperv network.sync-conflict-20250817-085609-UULL5XD.md

+++ /dev/null

@@ -1,15 +0,0 @@

----

-title: Fix WSL to Hyperv network

-updated: 2023-02-08 17:09:10Z

-created: 2023-02-08 17:08:43Z

-latitude: 39.18360820

-longitude: -96.57166940

-altitude: 0.0000

----

-```powershell

-Get-NetIPInterface | where {$_.InterfaceAlias -eq 'vEthernet (WSL)'} | Select-Object ifIndex,InterfaceAlias,ConnectionState,Forwarding

-```

-

- ```powershell

- Get-NetIPInterface | where {$_.InterfaceAlias -eq 'vEthernet (WSL)' -or $_.InterfaceAlias -eq 'vEthernet (Default Switch)'} | Set-NetIPInterface -Forwarding Enabled -Verbose

- ```

diff --git a/pages/Scripts/How to Shrink a WSL2 Virtual Disk – SCRIPT.sync-conflict-20250817-085626-UULL5XD.md b/pages/Scripts/How to Shrink a WSL2 Virtual Disk – SCRIPT.sync-conflict-20250817-085626-UULL5XD.md

deleted file mode 100644

index 807fe84..0000000

--- a/pages/Scripts/How to Shrink a WSL2 Virtual Disk – SCRIPT.sync-conflict-20250817-085626-UULL5XD.md

+++ /dev/null

@@ -1,20 +0,0 @@

----

-title: How to Shrink a WSL2 Virtual Disk – SCRIPT

-updated: 2022-10-17 18:47:57Z

-created: 2022-10-17 18:47:57Z

-source: https://stephenreescarter.net/how-to-shrink-a-wsl2-virtual-disk/

----

-

-Put the following lines in the file scriptname.txt:

-

-```batch

-wsl.exe --terminate WSLinux

-wsl --shutdown

-select vdisk file=%appdata%\..\Local\Local\Packages\SomeWSLVendorName\LocalState\ext4.vhdx

-compact vdisk

-exit

-```

-

-Create a task in task scheduler with this action

-

-`diskpart /s PathToScript\scriptname.txt > logfile.txt`

diff --git a/pages/Scripts/Powershell - create tasks for FFS.sync-conflict-20250817-085608-UULL5XD.md b/pages/Scripts/Powershell - create tasks for FFS.sync-conflict-20250817-085608-UULL5XD.md

deleted file mode 100644

index 37a1a9c..0000000

--- a/pages/Scripts/Powershell - create tasks for FFS.sync-conflict-20250817-085608-UULL5XD.md

+++ /dev/null

@@ -1,27 +0,0 @@

----

-title: Powershell - create tasks for FFS

-updated: 2021-03-08 21:09:39Z

-created: 2021-03-08 21:09:23Z

----

-```powershell

-

-Get-ChildItem -Path c:\Users\sstent\Documents\*.ffs_batch | foreach {

-

-echo $_.FullName

-

-$DelayTimeSpan = New-TimeSpan -Hours 2

-

-$A = New-ScheduledTaskAction -Execute "C:\Program Files\FreeFileSync\FreeFileSync.exe" -Argument $_.FullName

-

-$T = New-ScheduledTaskTrigger -Daily -At 7am -RandomDelay $DelayTimeSpan

-

-$P = New-ScheduledTaskPrincipal "sstent" -RunLevel Limited -LogonType Interactive

-

-$S = New-ScheduledTaskSettingsSet

-

-$D = New-ScheduledTask -Action $A -Principal $P -Trigger $T -Settings $S

-

-Register-ScheduledTask $_.Name -InputObject $D -TaskPath "\sync\"

-

-}

-```

diff --git a/pages/Scripts/Powershell - delete mepty dirs.sync-conflict-20250817-085631-UULL5XD.md b/pages/Scripts/Powershell - delete mepty dirs.sync-conflict-20250817-085631-UULL5XD.md

deleted file mode 100644

index 1b0f01d..0000000

--- a/pages/Scripts/Powershell - delete mepty dirs.sync-conflict-20250817-085631-UULL5XD.md

+++ /dev/null

@@ -1,38 +0,0 @@

----

-title: PowerShell - delete empty dirs

-updated: 2022-01-30 16:39:35Z

-created: 2022-01-30 16:38:59Z

-latitude: 40.78200000

-longitude: -73.99530000

-altitude: 0.0000

----

-

-```powershell

-

-# Set to true to test the script

- $whatIf = $false

-# Remove hidden files, like thumbs.db

- $removeHiddenFiles = $true

-# Get hidden files or not. Depending on removeHiddenFiles setting

- $getHiddelFiles = !$removeHiddenFiles

-# Remove empty directories locally

- Function Delete-EmptyFolder($path)

- {

-# Go through each subfolder,

- Foreach ($subFolder in Get-ChildItem -Force -LiteralPath $path -Directory)

- {

-# Call the function recursively

- Delete-EmptyFolder -path $subFolder.FullName

- }

-# Get all child items

- $subItems = Get-ChildItem -Force:$getHiddelFiles -LiteralPath $path

-# If there are no items, then we can delete the folder

-# Exclude folder: If (($subItems -eq $null) -and (-Not($path.contains("DfsrPrivate"))))

- If ($subItems -eq $null)

- {

- Write-Host "Removing empty folder '${path}'"

- Remove-Item -Force -Recurse:$removeHiddenFiles -LiteralPath $Path -WhatIf:$whatIf

- }

- }

-# Run the script

- Delete-EmptyFolder -path "G:\Old\"

-```

\ No newline at end of file

diff --git a/pages/Scripts/Powershell - get last boots.sync-conflict-20250817-085609-UULL5XD.md b/pages/Scripts/Powershell - get last boots.sync-conflict-20250817-085609-UULL5XD.md

deleted file mode 100644

index 2a49815..0000000

--- a/pages/Scripts/Powershell - get last boots.sync-conflict-20250817-085609-UULL5XD.md

+++ /dev/null

@@ -1,9 +0,0 @@

----

-title: Powershell - get last boots

-updated: 2021-03-24 14:55:35Z

-created: 2021-03-24 14:55:24Z

----

-```powershell

-

-Get-EventLog -LogName System |? {$_.EventID -in (6005,6006,6008,6009,1074,1076)} | ft TimeGenerated,EventId,Message -AutoSize -wrap

-```

diff --git a/pages/Scripts/Recursive Par2 creation.sync-conflict-20250817-085625-UULL5XD.md b/pages/Scripts/Recursive Par2 creation.sync-conflict-20250817-085625-UULL5XD.md

deleted file mode 100644

index c562fb3..0000000

--- a/pages/Scripts/Recursive Par2 creation.sync-conflict-20250817-085625-UULL5XD.md

+++ /dev/null

@@ -1,63 +0,0 @@

----

-title: Recursive Par2 creation

-updated: 2022-01-30 22:42:55Z

-created: 2022-01-30 22:42:30Z

----

-

-Recursive Par2 creation

-

-```

-

-@ECHO ON

-

-SETLOCAL

-

-REM check input path

-

-IF "%~1"=="" GOTO End

-

-IF NOT EXIST "%~1" (

-

-ECHO The path does not exist.

-

-GOTO End

-

-)

-

-IF NOT "%~x1"=="" (

-

-ECHO The path is not folder.

-

-GOTO End

-

-)

-

-REM set options for PAR2 client

-

-SET par2_path="C:\Users\stuar\Downloads\sstent\AppData\Local\MultiPar\par2j64.exe"

-

-REM recursive search of subfolders

-

-PUSHD %1

-

-FOR /D /R %%G IN (*.*) DO CALL :ProcEach "%%G"

-

-POPD

-

-GOTO End

-

-REM run PAR2 client

-

-:ProcEach

-

-ECHO create for %1

-

-%par2_path% c /fo /sm2048 /rr5 /rd1 /rf3 /lc+32 "%~1\%~n1.par2" *

-

-GOTO :EOF

-

-:End

-

-ENDLOCAL

-

-```

diff --git a/pages/Scripts/Script_ Re Run.sync-conflict-20250817-085615-UULL5XD.md b/pages/Scripts/Script_ Re Run.sync-conflict-20250817-085615-UULL5XD.md

deleted file mode 100644

index a7c4c14..0000000

--- a/pages/Scripts/Script_ Re Run.sync-conflict-20250817-085615-UULL5XD.md

+++ /dev/null

@@ -1,20 +0,0 @@

----

-title: 'Script: Re Run'

-updated: 2022-08-15 12:55:30Z

-created: 2022-08-15 12:55:30Z

-source: https://news.ycombinator.com/item?id=32467957

----

-

-I use this script, saved as `rerun`, to automatically re-execute a command whenever a file in the current directory changes:

-

-```bash

- #!/usr/bin/sh

-

- while true; do

- reset;

- "$@";

- inotifywait -e MODIFY --recursive .

- done

-```

-

-For example, if you invoke `rerun make test` then `rerun` will run `make test` whenever you save a file in your editor.

diff --git a/pages/Scripts/Single-file scripts that download their dependenci.sync-conflict-20250817-085613-UULL5XD.md b/pages/Scripts/Single-file scripts that download their dependenci.sync-conflict-20250817-085613-UULL5XD.md

deleted file mode 100644

index e2dedb3..0000000

--- a/pages/Scripts/Single-file scripts that download their dependenci.sync-conflict-20250817-085613-UULL5XD.md

+++ /dev/null

@@ -1,108 +0,0 @@

----

-title: Single-file scripts that download their dependencies · DBohdan.com

-updated: 2023-01-15 15:15:30Z

-created: 2023-01-15 15:15:30Z

-source: https://dbohdan.com/scripts-with-dependencies

----

-

-An ideal distributable script is fully contained in a single file. It runs on any compatible operating system with an appropriate language runtime. It is plain text, and you can copy and paste it. It does not require mucking about with a package manager, or several, to run. It does not conflict with other scripts’ packages or require managing a [project environment](https://docs.python.org/3/tutorial/venv.html) to avoid such conflicts.

-

-The classic way to get around all of these issues with scripts is to limit yourself to using the scripting language’s standard library. However, programmers writing scripts don’t want to; they want to use libraries that do not come with the language by default. Some scripting languages, runtimes, and environments resolve this conflict by offering a means to download and cache a script’s dependencies with just declarations in the script itself. This page lists such languages, runtimes, and environments. If you know more, [drop me a line](https://dbohdan.com/contact).

-

-- Contents

-- [Anything with a Nix package](#anything-with-a-nix-package)

-- [D](#d)

-- [Groovy](#groovy)

-- [JavaScript (Deno)](#javascript-deno)

-- [Kotlin (kscript)](#kotlin-kscript)

-- [Racket (Scripty)](#racket-scripty)

-- [Scala (Ammonite)](#scala-ammonite)

-- Anything with a Nix package

-

- The Nix package manager can [act as a `#!` interpreter](https://nixos.org/manual/nix/stable/command-ref/nix-shell.html#use-as-a--interpreter) and start another program with a list of dependencies available to it.

-

- ```

- #! /usr/bin/env nix-shell

- #! nix-shell -i python3 -p python3

- print("Hello, world!".rjust(20, "-"))

- ```

-- D

-

- D’s official package manager DUB supports [single-file packages](https://dub.pm/advanced_usage).

-

- ```

- #! /usr/bin/env dub

- /+ dub.sdl:

- name "foo"

- +/

- import std.range : padLeft;

- import std.stdio : writeln;

- void main() {

- writeln(padLeft("Hello, world!", '-', 20));

- }

- ```

-- Groovy

-

- Groovy comes with an embedded [JAR dependency manager](http://docs.groovy-lang.org/latest/html/documentation/grape.html).

-

- ```

- #! /usr/bin/env groovy

- @Grab(group='org.apache.commons', module='commons-lang3', version='3.12.0')

- import org.apache.commons.lang3.StringUtils

- println StringUtils.leftPad('Hello, world!', 20, '-')

- ```

-- JavaScript (Deno)

-

- [Deno](https://deno.land/) downloads dependencies like a browser. Deno 1.28 and later can also import from NPM packages. Current versions of Deno require you to pass a `run` argument to `deno`. One way to accomplish this from a script is with a form of [“exec magic”](https://wiki.tcl-lang.org/page/exec+magic). Here the magic is modified from a [comment](https://github.com/denoland/deno/issues/929#issuecomment-429004626) by Rafał Pocztarski.

-

- ```

- #! /bin/sh

- ":" //#; exec /usr/bin/env deno run "$0" "$@"

- import leftPad from "npm:left-pad";

- console.log(leftPad("Hello, world!", 20, "-"));

- ```

-

- On Linux systems with [recent GNU env(1)](https://coreutils.gnu.narkive.com/e0afmL4P/env-add-s-option-split-string-for-shebang-lines-in-scripts) and on [FreeBSD](https://www.freebsd.org/cgi/man.cgi?env) you can replace the magic with `env -S`.

-

- ```

- #! /usr/bin/env -S deno run

- import leftPad from "npm:left-pad";

- console.log(leftPad("Hello, world!", 20, "-"));

- ```

-- Kotlin (kscript)

-

- [kscript](https://github.com/holgerbrandl/kscript) is an unofficial scripting tool for Kotlin that understands several comment-based directives, including one for dependencies.

-

- ```

- #! /usr/bin/env kscript

- //DEPS org.apache.commons:commons-lang3:3.12.0

- import org.apache.commons.lang3.StringUtils

- println(StringUtils.leftPad("Hello, world!", 20, "-"))

- ```

-- Racket (Scripty)

-

- [Scripty](https://docs.racket-lang.org/scripty/) interactively prompts you to install the missing dependencies for a script in any Racket language.

-

- ```

- #! /usr/bin/env racket

- #lang scripty

- #:dependencies '("base" "typed-racket-lib" "left-pad")

- ------------------------------------------

- #lang typed/racket/base

- (require left-pad/typed)

- (displayln (left-pad "Hello, world!" 20 "-"))

- ```

-- Scala (Ammonite)

-

- The scripting environment in Ammonite lets you [import Ivy dependencies](https://ammonite.io/#IvyDependencies).

-

- ```

- #! /usr/bin/env amm

- import $ivy.`org.apache.commons:commons-lang3:3.12.0`,

- org.apache.commons.lang3.StringUtils

- println(StringUtils.leftPad("Hello, world!", 20, "-"))

- ```

-

- * * *

-

- Tags: [list](https://dbohdan.com/tags#list), [programming](https://dbohdan.com/tags#programming).

\ No newline at end of file

diff --git a/pages/Scripts/Small ODROID-XU4 (HC1) scripts ($2059139) · Snippets · Snippets · GitLab.sync-conflict-20250817-085605-UULL5XD.md b/pages/Scripts/Small ODROID-XU4 (HC1) scripts ($2059139) · Snippets · Snippets · GitLab.sync-conflict-20250817-085605-UULL5XD.md

deleted file mode 100644

index 602480d..0000000

--- a/pages/Scripts/Small ODROID-XU4 (HC1) scripts ($2059139) · Snippets · Snippets · GitLab.sync-conflict-20250817-085605-UULL5XD.md

+++ /dev/null

@@ -1,55 +0,0 @@

----

-page-title: "Small ODROID-XU4 (HC1) scripts ($2059139) · Snippets · Snippets · GitLab"

-url: https://gitlab.com/-/snippets/2059139

-date: "2023-03-13 15:14:55"

----

-[](https://gitlab.com/-/snippets/2059139/raw/master/Readme.md \"Open raw\")[](https://gitlab.com/-/snippets/2059139/raw/master/Readme.md?inline=false \"Download\")

-

-- Small ODROID-XU4 (HC1) scripts

-

- CPU underclocking, CPU clock reset and CPU temperature display.

-

- ```

- #!/usr/bin/env bash

-

- # Reset the XU4 (HC1) CPU clocks to default.

-

- set -euo pipefail

-

- # Auto-restart with sudo.

- if [[ $UID -ne 0 ]]; then

- sudo -p 'Restarting as root, password: ' bash "$0" "$@"

- exit $?

- fi

-

- cpufreq-set -c 0 --max 1500000

- cpufreq-set -c 4 --max 2000000

- ```

-

- ```

- #!/usr/bin/env bash

-

- # Monitor the XU4 (HC1) CPU temperature.

-

- set -euo pipefail

-

- # thermal_zone2 seems to be the highest most of the time.

- while [ true ]; do awk '{printf "\r%3.1f°C", $1/1000}' /sys/class/thermal/thermal_zone2/temp; sleep 1; done

- ```

-

- ```

- #!/usr/bin/env bash

-

- # Underclock the XU4 (HC1) CPU.

-

- set -euo pipefail

-

- # Auto-restart with sudo.

- if [[ $UID -ne 0 ]]; then

- sudo -p 'Restarting as root, password: ' bash "$0" "$@"

- exit $?

- fi

-

- cpufreq-set -c 0 --max 1000000

- cpufreq-set -c 4 --max 1000000

- ```

\ No newline at end of file

diff --git a/pages/Scripts/Windows 11 - autologin setup.sync-conflict-20250817-085623-UULL5XD.md b/pages/Scripts/Windows 11 - autologin setup.sync-conflict-20250817-085623-UULL5XD.md

deleted file mode 100644

index 0c30c5c..0000000

--- a/pages/Scripts/Windows 11 - autologin setup.sync-conflict-20250817-085623-UULL5XD.md

+++ /dev/null

@@ -1,9 +0,0 @@

-

-

-- start > type netplwiz

-- run netplwiz

-- click "Users must enter a user name and password to use this computer"

- - tick/untick even if unticked

-- click apply

- - local user name will be shown

- - enter "microsoft.com" password

\ No newline at end of file

diff --git a/pages/Scripts/choco.sync-conflict-20250817-085625-UULL5XD.md b/pages/Scripts/choco.sync-conflict-20250817-085625-UULL5XD.md

deleted file mode 100644

index 30242e8..0000000

--- a/pages/Scripts/choco.sync-conflict-20250817-085625-UULL5XD.md

+++ /dev/null

@@ -1,66 +0,0 @@

----

-title: choco

-updated: 2020-08-19 20:24:55Z

-created: 2020-08-19 12:44:22Z

----

-

-https://chocolatey.org/docs/helpers-install-chocolatey-zip-package

-

-

-Chocolatey v0.10.15

-adobereader 2020.012.20041

-autohotkey 1.1.33.02

-autohotkey.install 1.1.33.02

-calibre 4.22.0

-camstudio 2.7.316.20161004

-chocolatey 0.10.15

-chocolatey-core.extension 1.3.5.1

-darktable 3.0.2

-DotNet4.5.2 4.5.2.20140902

-emacs 26.3.0.20191219

-filezilla 3.49.1

-Firefox 79.0.0.20200817

-git 2.28.0

-git.install 2.28.0

-github 3.2.0.20181119

-github-desktop 2.5.3

-GoogleChrome 84.0.4147.135

-greenshot 1.2.10.6

-gsudo 0.7.0

-hugin 2019.2.0

-hugin.install 2019.2.0

-joplin 1.0.233

-KB2919355 1.0.20160915

-KB2919442 1.0.20160915

-keepass 2.45

-keepass-keeagent 0.8.1.20180426

-keepass-keepasshttp 1.8.4.220170629

-keepass-plugin-keeagent 0.12.0

-keepass.install 2.45

-krita 4.3.0

-mpc-hc 1.7.13.20180702

-openssh 8.0.0.1

-putty 0.74

-putty.portable 0.74

-rawtherapee 5.8

-spacemacs 0.200.13

-sublimetext3 3.2.2

-SublimeText3.PackageControl 2.0.0.20140915

-sumatrapdf 3.2

-sumatrapdf.commandline 3.2

-sumatrapdf.install 3.2

-sumatrapdf.portable 3.2

-tightvnc 2.8.27

-vcredist2010 10.0.40219.2

-vcxsrv 1.20.8.1

-visualstudiocode 1.23.1.20180730

-vlc 3.0.11

-voicemeeter 1.0.7.3

-voicemeeter.install 1.0.7.3

-vscode 1.48.0

-vscode.install 1.48.0

-windirstat 1.1.2.20161210

-xnviewmp 0.96.5

-xnviewmp.install 0.96.5

-youtube-dl 2020.07.28

-55 packages installed.

diff --git a/pages/Scripts/powerpoint VBA - copy comments.sync-conflict-20250817-085612-UULL5XD.md b/pages/Scripts/powerpoint VBA - copy comments.sync-conflict-20250817-085612-UULL5XD.md

deleted file mode 100644

index 76c7610..0000000

--- a/pages/Scripts/powerpoint VBA - copy comments.sync-conflict-20250817-085612-UULL5XD.md

+++ /dev/null

@@ -1,40 +0,0 @@

----

-title: powerpoint VBA - copy comments

-updated: 2022-06-14 13:38:24Z

-created: 2022-06-14 13:37:55Z

-latitude: 40.75891113

-longitude: -73.97901917

-altitude: 0.0000

----

-

-```vb

-Sub CopyComments()

- Dim lFromSlide As Long

- Dim lToSlide As Long

-

- lFromSlide = InputBox("Copy comments from slide:", "SLIDE")

- lToSlide = InputBox("Copy comments to slide:", "SLIDE")

-

- Dim oFromSlide As Slide

- Dim oToSlide As Slide

-

- Set oFromSlide = ActivePresentation.Slides(lFromSlide)

- Set oToSlide = ActivePresentation.Slides(lToSlide)

-

- Dim oSource As Comment

- Dim oTarget As Comment

-

- For Each oSource In oFromSlide.Comments

-

- Set oTarget = _

- ActivePresentation.Slides(lToSlide).Comments.Add( _

- oSource.Left, _

- oSource.Top, _

- oSource.Author, _

- oSource.AuthorInitials, _

- oSource.Text)

-

- Next oSource

-

-End Sub

-```

diff --git a/pages/Scripts/update kernel HC1.sync-conflict-20250817-085619-UULL5XD.md b/pages/Scripts/update kernel HC1.sync-conflict-20250817-085619-UULL5XD.md

deleted file mode 100644

index 114ad3e..0000000

--- a/pages/Scripts/update kernel HC1.sync-conflict-20250817-085619-UULL5XD.md

+++ /dev/null

@@ -1,15 +0,0 @@

----

-title: update kernel HC1

-updated: 2021-09-30 00:45:49Z

-created: 2021-09-22 16:57:09Z

-latitude: 40.78200000

-longitude: -73.99530000

-altitude: 0.0000

----

-```bash

- grep UUID /media/boot/boot.ini*

- apt update

- apt upgrade -V linux-odroid-5422

- blkid; grep UUID /media/boot/boot.ini*

- reboot

-```

diff --git a/pages/Secom.sync-conflict-20250817-085615-UULL5XD.md b/pages/Secom.sync-conflict-20250817-085615-UULL5XD.md

deleted file mode 100644

index 9f10df5..0000000

--- a/pages/Secom.sync-conflict-20250817-085615-UULL5XD.md

+++ /dev/null

@@ -1,25 +0,0 @@

-- https://rndwiki-pro.its.hpecorp.net/display/HCSS/SECOM

--

-- [[Oct 14th, 2024]]

- -

- -

- -

--

--

-- Services funding coming from [[Val]]

--

-- [[Tariq Kahn]]

- - Non-standard Stuff

- - Multi-tenant capability

- - "pricing"

- - "white label"ing? of console

- - want their logo

- - Veeam integration

- - Networking

- - multiple tier 1 NSX routers

- -

- -

- -

- -

- -

- -

\ No newline at end of file

diff --git a/pages/SelfAssessment.sync-conflict-20250817-085608-UULL5XD.md b/pages/SelfAssessment.sync-conflict-20250817-085608-UULL5XD.md

deleted file mode 100644

index dcf2ad1..0000000

--- a/pages/SelfAssessment.sync-conflict-20250817-085608-UULL5XD.md

+++ /dev/null

@@ -1,23 +0,0 @@

--

-- Bold goals - Describe the goals you achieved and the contributions and impact you delivered. Please include 2-3 examples.

- - [Complete initial deployments of @Equinix hardware, Ensure GA of PCE@Equinix program, Engage with customer opportunities to ensure successful deployments of PCE@Equinix] My primary focus for FY24 was delivering on the Private Cloud at Equinix and PCE at Equinix programs by completing the initial hardware deployments (for both the network infrastructure and the PCE pre-deployments), bringing the PCE at Equinix offer to GA, while also supporting the parallel PCBE and PCAI programs. Alongside these program efforts I have fielded and supported multiple sales enquiries with the aim of landing our first PC@Equinix deal. These items were all achieved and, given the headwinds we faced going into FY24, I am particularly proud of this outcome.

- - [Foster new innovations] As a secondary focus, I looked to foster and encourage innovation in HPE and was very happy to be invited to be a reviewer for the TechCon summit again this year. This is always a very rewarding process and allows me an opportunity to use my technical background to provide guidance and feedback to our up and coming engineers.

-- HPE Beliefs - Describe how you demonstrated the (Obsess over the Customer, Demonstrate We Before I, and Exercise Healthy Impatience). Please include 2-3 examples.

- - Healthy Impatience - It's very easy, especially with internal customers, to not push as aggressively for internal goals as you do when external clients are driving the timeline. I strive to bring the timeliness and forward drive that I learned in my years as a consultant to external clients, and aim to deliver the same urgency and quality metrics to my internal clients. A good example of this was the ordering/build/deployment of the PCE Pre-Deployment units as part of the PCE@Equinix program - with no hard deadlines to work to, I maintained a healthy impatience, driving the team to get the systems deployed as soon as possible.

- - Obsess over the Customer - I think this belief is tightly interlinked with Healthy Impatience, and forms part of providing a positive experience for both internal and external customers. One of the key lessons I learned from consulting is that to be able to apply this belief you truly need to understand the goals, motivations and external drivers of your clients and I always try to keep these external factors in mind when both advising and driving delivery for those clients (and partners). For example, one of the PC@Equinix marketables (M1617) required balancing our contractual obligations to Equinix, Equinix's desire to see a return on their investment and our internal engineering capacity - keeping all of these in mind helped me drive engineering progress whilst maintaining good standing with our partner Equinix.

-- My top 1-2 areas of strength are:

- - Strong technical background - numerous times over the course of the PC@Equinix and PCE@Equinix programs I've been able to leverage my technical background to better guide and 'sanity check' our engineering resources to drive the delivery of a better quality product. This skillset has also been very helpful when assisting our Equinix Product Management peers, who may not have the technical background/experience, to understand the technical answers (and reasoning) coming from our engineering teams. Finally, my background in the various hyperscaler offerings has been crucial in working through the internal compliance to resell Equinix Digital services to our end customers.

- - Balancing priorities - As part of my current role (and previous ones) there has been a strong need to balance often competing priorities (and external pressures) while ensuring that both high and low priority tasks get completed on time. This often requires thoughtful delegation, or leveraging technologies and systems to accelerate work where needed.

-- My top 1-2 development opportunities are:

- - I would like to find more opportunities to a) leverage my skillset to communicate technical concepts to less technical resources and be that bridge for communication, and b) find ways to mentor junior resources

-- Include additional information about your contributions, progress against your Leadership Capability & Development Bold Goal, and your professional growth throughout the year.

- - I strongly believe that my ability to 'stay the course' regardless of the headwinds, distractions, competing priorities, road blocks and organizational shift has allowed my programs to achieve some measure of success in somewhat uncertain times.

--

--

-- BOLD GOALS

- - #### Complete initial deployments of @Equinix hardware

- - #### Ensure GA of PCE@Equinix program

- - Engage with customer opportunities to ensure successful deployments of PCE@Equinix

- - #### Foster new innovations

- - #### Attain TCP DT status

- - #### Return to a manager role

\ No newline at end of file

diff --git a/pages/Sep 1st, 2024.sync-conflict-20250817-085624-UULL5XD.md b/pages/Sep 1st, 2024.sync-conflict-20250817-085624-UULL5XD.md

deleted file mode 100644

index 704c380..0000000

--- a/pages/Sep 1st, 2024.sync-conflict-20250817-085624-UULL5XD.md

+++ /dev/null

@@ -1,3 +0,0 @@

-- [[workout]]

- - [[run]] 3mi

- - [[grippers]] 1: 3x

\ No newline at end of file

diff --git a/pages/SpellingBee.sync-conflict-20250817-085607-UULL5XD.md b/pages/SpellingBee.sync-conflict-20250817-085607-UULL5XD.md

deleted file mode 100644

index 3cf20d5..0000000

--- a/pages/SpellingBee.sync-conflict-20250817-085607-UULL5XD.md

+++ /dev/null

@@ -1 +0,0 @@

--

\ No newline at end of file

diff --git a/pages/TESTPDF.sync-conflict-20250817-085610-UULL5XD.md b/pages/TESTPDF.sync-conflict-20250817-085610-UULL5XD.md

deleted file mode 100644

index 18b8e07..0000000

--- a/pages/TESTPDF.sync-conflict-20250817-085610-UULL5XD.md

+++ /dev/null

@@ -1 +0,0 @@

--

\ No newline at end of file

diff --git a/pages/Tangles.sync-conflict-20250817-085616-UULL5XD.md b/pages/Tangles.sync-conflict-20250817-085616-UULL5XD.md

deleted file mode 100644

index 3d9ca98..0000000

--- a/pages/Tangles.sync-conflict-20250817-085616-UULL5XD.md

+++ /dev/null

@@ -1,19 +0,0 @@

-tags:: [[drawing]]

-

-- Various Zentangles that I've found

-- b-jazzy

- -

-- BeeLine

- -

-- CBEELINE

- -

- -

-- Jaz

- -

-- Sensono

- -

-- Sevens

- -

-- SweetPea

- -

- -

\ No newline at end of file

diff --git a/pages/TanuUpdate.sync-conflict-20251023-155538-UULL5XD.md b/pages/TanuUpdate.sync-conflict-20251023-155538-UULL5XD.md

deleted file mode 100644

index d471f13..0000000

--- a/pages/TanuUpdate.sync-conflict-20251023-155538-UULL5XD.md

+++ /dev/null

@@ -1,114 +0,0 @@

--

- - ISV - HSM

- - working with Parissa and team on documenting workflow / certification rubric

- - Simplivity

- - Karthik and I connected with Usman

- - NPI planned for November

- - Translations

- - Connected with GlobalLink team to understand the ML based translation services

- - Awaiting updated quotes

- - ALAs/Legal

- - escalated SBOM request

- - Security

- - Followed up on David Estes' comment re security review done as part of BD1

- - Awaiting feedback from Adam Lispcom

- - Tracy replied that they had not seen any of this documentation

- - Working with Sridhar on defining security SBOM requirements

-- Current Update

- id:: 684ad68e-ecb1-4ea7-bdc1-51e6f4f1980e

- - **ISV Strategy/Program**

- - Cheri Readout (ISV program)

- - reviewed deck to incl. HVM certifications

- - Rajeev working on getting time with Cheri

- - HVM - Self-validations

- - Reviewed smartsheet and existing documentation

- - Requests in flight:

- - SustainLake - internal development effort.

- - Monitoring progress - currently at mock up stage. Aiming for demo with dummy data as MVP

- - Schneider UPS - supplied contact details to schneider, followup [[06-13-2025]]

- - Commvault GTM - connected with Matt Wineberg - supplied content for their marketing materials

- - Storpool - partner developing plugin. Next step, get answers from engineering on their questions (previously blocked) [HPE - Morpheus tech questions.xlsx](https://hpe-my.sharepoint.com/:x:/r/personal/stuart_stent_hpe_com/Documents/Attachments/HPE%20-%20Morpheus%20tech%20questions.xlsx?d=wa7971487586644078649b15f76ba5eb8&csf=1&web=1&e=rCth6w)

- -

- - Veeam/Data Protection API

- - connected with Vish - met to review DataProtection API requirements this week

- - Vish to connect with David Estes to confirm engineering direction and document engineering stories

- - Call with Veeam on [[06-10-2025]]

- - Next Steps:

- - Follow-up with Rajeev on getting time with Cheri for readout

- - Update deck to incl info on current HVM process and state

- - HVM - Review processes - i.e. how are we onboarding/reviewing self-certs/etc

- - Working with Parissa and team on getting self-certification workflow fully defined (only test cases defined so far)

- id:: 684ad885-ffe1-4478-882e-c2507516b8a0

- - PCAI - Connect with team on "unleash AI" program

- - **Platform Security**

- - Working with Justin Shepard/Sridhar Bandi to document the stack components with gaps (missing hardening documentation/processes/etc)

- - incl. alignment to internal mandates and customer requests (infosight & PCE-D customers)

- - Working with Tommy to get Threat Analysis for Morpheus/HVM completed

- - **EULA/ALA - for Morpheus (incl PSL and MSP requirements)**

- - Combination VME/Morpheus draft completed and reviewed with VME team

- - Next steps:

- - Validate the SBOM is accurate (needs engineering resource)

- - Send to legal for review

- - **Service Desc**

- - Awaiting feedback from Alonzo and team

- - Next Step: Send to Donna for EFR review

- - **TekTalk - Delivered Thursday [[06-05-2025]]**

- - **Translation request**